Apple Child Safety Features

By MacRumors Staff

Apple Child Safety Features How-Tos

iOS 17: How to Enable Sensitive Content Warnings

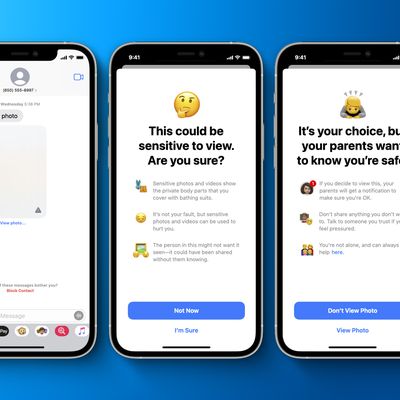

In iOS 17 and iPadOS 17, Apple has introduced Sensitive Content Warnings to prevent unsolicited nude photos and similar content coming through to your iPhone or iPad. Here's how to enable the feature.

With Sensitive Content Warnings enabled in iOS 17, incoming files, videos, and images are scanned on-device and blurred if they contain nudity. The opt-in blurring can be applied to images in...


iOS 17: How to Manage Communication Safety for Your Child's iPhone

With iOS 17 and iPadOS 17, Apple turned on Communication Safety, a feature that warns children when they send or receive photos that contain nudity. It is enabled by default for children under the age of 13 who are signed in with an Apple ID and are part of a Family Sharing group, and parents can also enable it for older teens.

Communication Safety first...

Apple Child Safety Features Articles

Craig Federighi Acknowledges Confusion Around Apple Child Safety Features and Explains New Details About Safeguards

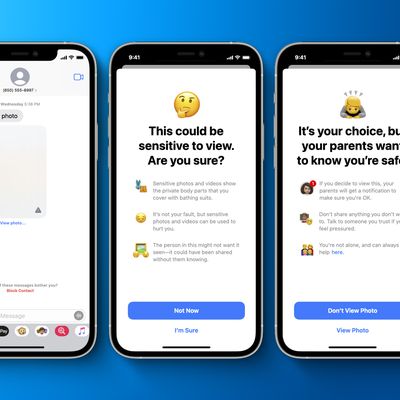

Apple's senior vice president of software engineering, Craig Federighi, today defended the company's controversial planned child safety features in a significant interview with The Wall Street Journal, revealing a number of new details about the safeguards built into Apple's system for scanning users' photo libraries for Child Sexual Abuse Material (CSAM).

Federighi admitted that Apple...

Apple Employees Internally Raising Concerns Over CSAM Detection Plans

Apple employees are now joining the chorus of individuals raising concerns over Apple's plans to scan iPhone users' photo libraries for child sexual abuse material (CSAM). According to a report from Reuters, they are speaking out internally about how the technology could be used to scan users' photos for other types of content.

According to Reuters, an unspecified number of Apple employees ...

Apple Remains Committed to Launching New Child Safety Features Later This Year

Last week, Apple previewed new child safety features that it said will be coming to the iPhone, iPad, and Mac with software updates later this year. The company said the features will be available in the U.S. only at launch.

A refresher on Apple's new child safety features from our previous coverage: First, an optional Communication Safety feature in the Messages app on iPhone, iPad, and Mac...

Apple Privacy Chief Explains 'Built-in' Privacy Protections in Child Safety Features Amid User Concerns

In an interview with TechCrunch, Apple's Head of Privacy, Erik Neuenschwander, has responded to some of users' concerns about the company's plans for new child safety features that will scan messages and Photos libraries.

When asked why Apple is only choosing to implement child safety features that scan for Child Sexual Abuse Material (CSAM), Neuenschwander explained that Apple has "now got ...

Facebook's Former Security Chief Discusses Controversy Around Apple's Planned Child Safety Features

Amid the ongoing controversy around Apple's plans to implement new child safety features that would involve scanning messages and users' photo libraries, Facebook's former security chief, Alex Stamos, has weighed into the debate, criticizing multiple parties involved and offering suggestions for the future.

In an extensive Twitter thread, Stamos said that there are "no easy answers" in the...

Apple Open to Expanding New Child Safety Features to Third-Party Apps

Apple today held a questions-and-answers session with reporters regarding its new child safety features, and during the briefing, Apple confirmed that it would be open to expanding the features to third-party apps in the future.

As a refresher, Apple unveiled three new child safety features coming to future versions of iOS 15, iPadOS 15, macOS Monterey, and/or watchOS 8.

Apple's New Child ...

Apple Publishes FAQ to Address Concerns About CSAM Detection and Messages Scanning

Apple has published a FAQ titled "Expanded Protections for Children" which aims to allay users' privacy concerns about the new CSAM detection in iCloud Photos and communication safety for Messages features that the company announced last week.

"Since we announced these features, many stakeholders including privacy organizations and child safety organizations have expressed their support of...

Apple Addresses CSAM Detection Concerns, Will Consider Expanding System on Per-Country Basis

Apple this week announced that, starting later this year with iOS 15 and iPadOS 15, the company will be able to detect known Child Sexual Abuse Material (CSAM) images stored in iCloud Photos, enabling Apple to report these instances to the National Center for Missing and Exploited Children, a non-profit organization that works in collaboration with law enforcement agencies across the United...

Privacy Whistleblower Edward Snowden and EFF Slam Apple's Plans to Scan Messages and iCloud Images

Apple's plans to scan users' iCloud Photos libraries against a database of child sexual abuse material (CSAM) for matches, and to scan children's messages for explicit content, have come under fire from privacy whistleblower Edward Snowden and the Electronic Frontier Foundation (EFF).

In a series of tweets, the prominent privacy campaigner and whistleblower Edward Snowden highlighted concerns...

Apple Confirms Detection of Child Sexual Abuse Material is Disabled When iCloud Photos is Turned Off

Apple today announced that iOS 15 and iPadOS 15 will see the introduction of a new method for detecting child sexual abuse material (CSAM) on iPhones and iPads in the United States.

User devices will download an unreadable database of known CSAM image hashes and will perform an on-device comparison against the user's own photos, flagging any matches to known CSAM before they're uploaded to iCloud...

Security Researchers Express Alarm Over Apple's Plans to Scan iCloud Images, But Practice Already Widespread

Apple today announced that with the launch of iOS 15 and iPadOS 15, it will begin scanning iCloud Photos in the U.S. to look for known Child Sexual Abuse Material (CSAM), with plans to report the findings to the National Center for Missing and Exploited Children (NCMEC).

Before Apple detailed its plans, news of the CSAM initiative leaked, and security researchers have already begun...

Apple Introducing New Child Safety Features, Including Scanning Users' Photo Libraries for Known Sexual Abuse Material

Apple today previewed new child safety features that will be coming to its platforms with software updates later this year. The company said the features will be available in the U.S. only at launch and will be expanded to other regions over time.

Communication Safety

First, the Messages app on the iPhone, iPad, and Mac will be getting a new Communication Safety feature to warn children...