Apple Child Safety Features

By MacRumors Staff

Apple Child Safety Features How Tos

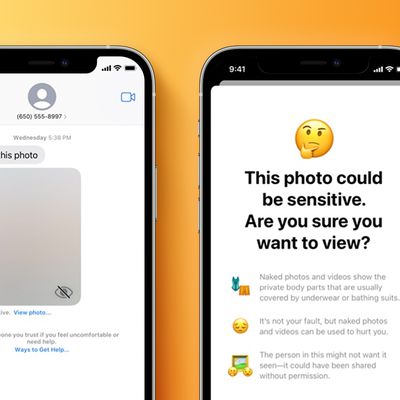

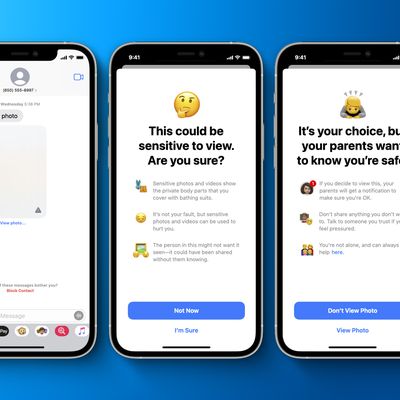

iOS 17: How to Enable Sensitive Content Warnings

In iOS 17 and iPadOS 17, Apple has introduced Sensitive Content Warnings to prevent unsolicited nude photos and similar content from coming through to your iPhone or iPad. Here's how to enable the feature.

With Sensitive Content Warnings enabled in iOS 17, incoming files, videos, and images are scanned on-device and blocked if they contain nudity. The opt-in blurring can be applied to images in...

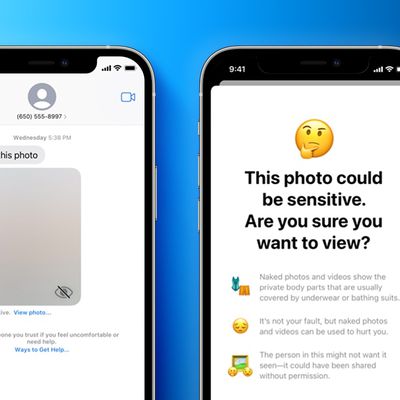

iOS 17: How to Manage Communication Safety for Your Child's iPhone

With the introduction of iOS 17 and iPadOS 17, Apple turned on Communication Safety, a previously opt-in feature that warns children when sending or receiving photos that contain nudity. It is enabled by default for children under the age of 13 who are signed in with an Apple ID and part of a Family Sharing group, but parents can also enable it for older teens.

Communication Safety first...

Apple Child Safety Features Articles

Apple Hit With $1.2B Lawsuit Over Abandoned CSAM Detection System

Apple is facing a lawsuit seeking $1.2 billion in damages over its decision to abandon plans for scanning iCloud photos for child sexual abuse material (CSAM), according to a report from The New York Times.

Filed in Northern California on Saturday, the lawsuit represents a potential group of 2,680 victims and alleges that Apple's failure to implement previously announced child safety tools...

Apple Provides Further Clarity on Why It Abandoned Plan to Detect CSAM in iCloud Photos

Apple on Thursday provided its fullest explanation yet for last year abandoning its controversial plan to detect known Child Sexual Abuse Material (CSAM) stored in iCloud Photos.

Apple's statement, shared with Wired and reproduced below, came in response to child safety group Heat Initiative's demand that the company "detect, report, and remove" CSAM from iCloud and offer more tools for...

iOS 17 Expands Communication Safety Worldwide, Turned On by Default

Starting with iOS 17, iPadOS 17, and macOS Sonoma, Apple is making Communication Safety available worldwide. The previously opt-in feature will now be turned on by default for children under the age of 13 who are signed in to their Apple ID and part of a Family Sharing group. Parents can turn it off in the Settings app under Screen Time.

Communication Safety first launched in the U.S. with...

Apple's Communication Safety Feature for Children Expanding to 6 New Countries

Earlier this month, we noted Apple was working to expand its Communication Safety feature to additional countries, and it appears the next round of expansion will include six new countries.

Communication Safety is an opt-in feature in the Messages app across Apple's platforms that is designed to warn children when receiving or sending photos that contain nudity. In the coming weeks, it will...

Apple Expanding iPhone's Communication Safety Feature to More Countries

In recognition of Safer Internet Day today, Apple has highlighted the company's software features and tools designed to protect children online, such as Screen Time and Communication Safety. The press release was shared in Europe only.

Communication Safety is an opt-in feature in the Messages app on the iPhone, iPad, Mac, and Apple Watch that is designed to warn children when receiving or...

Apple Remains Silent About Plans to Detect Known CSAM Stored in iCloud Photos

It has now been over a year since Apple announced plans for three new child safety features, including a system to detect known Child Sexual Abuse Material (CSAM) images stored in iCloud Photos, an option to blur sexually explicit photos in the Messages app, and child exploitation resources for Siri. The latter two features are now available, but Apple remains silent about its plans for the CSAM...

European Commission to Release Draft Law Enforcing Mandatory Detection of Child Sexual Abuse Material on Digital Platforms

The European Commission is set to release a draft law this week that could require tech companies like Apple and Google to identify, remove and report to law enforcement illegal images of child abuse on their platforms, claims a new report out today.

According to a leak of the proposal obtained by Politico, the EC believes voluntary measures taken by some digital companies have thus far...

Apple's Messages Communication Safety Feature for Kids Expanding to the UK, Canada, Australia, and New Zealand

Apple is planning to expand its Communication Safety in Messages feature to the UK, according to The Guardian. Communication Safety in Messages was introduced in the iOS 15.2 update released in December, but the feature has been limited to the United States until now.

Communication Safety in Messages is designed to scan incoming and outgoing iMessage images on children's devices for nudity...

Apple Removes All References to Controversial CSAM Scanning Feature From Its Child Safety Webpage [Updated]

Apple has quietly nixed all mentions of CSAM from its Child Safety webpage, suggesting its controversial plan to detect child sexual abuse images on iPhones and iPads may hang in the balance following significant criticism of its methods.

Apple in August announced a planned suite of new child safety features, including scanning users' iCloud Photos libraries for Child Sexual Abuse Material...

Apple's Proposed Phone-Scanning Child Safety Features 'Invasive, Ineffective, and Dangerous,' Say Cybersecurity Researchers in New Study

More than a dozen prominent cybersecurity experts hit out at Apple on Thursday for relying on "dangerous technology" in its controversial plan to detect child sexual abuse images on iPhones (via The New York Times).

The damning criticism came in a new 46-page study by researchers that looked at plans by Apple and the European Union to monitor people's phones for illicit material, and called...

EFF Flew a Banner Over Apple Park During Last Apple Event to Protest CSAM Plans

In protest of the company's now delayed CSAM detection plans, the EFF, which has been vocal about Apple's child safety features plans in the past, flew a banner over Apple Park during the iPhone 13 event earlier this month with a message for the Cupertino tech giant.

During Apple's fully-digital "California streaming" event on September 14, which included no physical audience attendance in...

EFF Pressures Apple to Completely Abandon Controversial Child Safety Features

The Electronic Frontier Foundation has said it is "pleased" with Apple's decision to delay the launch of its controversial child safety features, but now it wants Apple to go further and completely abandon the rollout.

Apple on Friday said it was delaying the planned features to "take additional time over the coming months to collect input and make improvements," following negative...

Apple Delays Rollout of Controversial Child Safety Features to Make Improvements

Apple has delayed the rollout of the child safety features that it announced last month following negative feedback, the company announced today.

The planned features include scanning users' iCloud Photos libraries for Child Sexual Abuse Material (CSAM), Communication Safety to warn children and their parents when receiving or sending sexually explicit photos, and expanded CSAM guidance...

University Researchers Who Built a CSAM Scanning System Urge Apple to Not Use the 'Dangerous' Technology

Respected university researchers are sounding the alarm bells over the technology behind Apple's plans to scan iPhone users' photo libraries for CSAM, or child sexual abuse material, calling the technology "dangerous."

Jonathan Mayer, an assistant professor of computer science and public affairs at Princeton University, as well as Anunay Kulshrestha, a researcher at Princeton University...

Global Coalition of Policy Groups Urges Apple to Abandon 'Plan to Build Surveillance Capabilities into iPhones'

An international coalition of more than 90 policy and rights groups published an open letter on Thursday urging Apple to abandon its plans to "build surveillance capabilities into iPhones, iPads, and other products" – a reference to the company's intention to scan users' iCloud photo libraries for images of child sex abuse (via Reuters).

"Though these capabilities are intended to protect...

Apple Says NeuralHash Tech Impacted by 'Hash Collisions' Is Not the Version Used for CSAM Detection

Developer Asuhariet Yvgar this morning said that he had reverse-engineered the NeuralHash algorithm that Apple is using to detect Child Sexual Abuse Material (CSAM) in iCloud Photos, posting evidence on GitHub and details on Reddit.

Yvgar said that he reverse-engineered the NeuralHash algorithm from iOS 14.3, where the code was hidden, and he rebuilt it in Python. After he uploaded his...

German Politician Asks Apple CEO Tim Cook to Abandon CSAM Scanning Plans

Manuel Höferlin, a member of the German parliament who serves as the chairman of the Digital Agenda committee in Germany, has penned a letter to Apple CEO Tim Cook, pleading with Apple to abandon its plan to scan iPhone users' photo libraries for CSAM (child sexual abuse material) images later this year.

In the two-page letter (via iFun), Höferlin said that he first applauds Apple's efforts to...

Corellium Launching New Initiative to Hold Apple Accountable Over CSAM Detection Security and Privacy Claims

Security research firm Corellium this week announced it is launching a new initiative that will "support independent public research into the security and privacy of mobile applications," and one of the initiative's first projects will be Apple's recently announced CSAM detection plans.

Since its announcement earlier this month, Apple's plan to scan iPhone users' photo libraries for CSAM or...

Apple Outlines Security and Privacy of CSAM Detection System in New Document

Apple today shared a document that provides a more detailed overview of the child safety features that it first announced last week, including design principles, security and privacy requirements, and threat model considerations.

Apple's plan to detect known Child Sexual Abuse Material (CSAM) images stored in iCloud Photos has been particularly controversial and has prompted concerns from...

Craig Federighi Acknowledges Confusion Around Apple Child Safety Features and Explains New Details About Safeguards

Apple's senior vice president of software engineering, Craig Federighi, has today defended the company's controversial planned child safety features in a significant interview with The Wall Street Journal, revealing a number of new details about the safeguards built into Apple's system for scanning users' photo libraries for Child Sexual Abuse Material (CSAM).

Federighi admitted that Apple...