Apple has made another addition to its growing AI repertoire with the creation of a tool that leverages large language models (LLMs) to animate static images based on a user's text prompts.

Apple describes the innovation in a new research paper titled "Keyframer: Empowering Animation Design Using Large Language Models."

"While one-shot prompting interfaces are common in commercial text-to-image systems like Dall·E and Midjourney, we argue that animations require a more complex set of user considerations, such as timing and coordination, that are difficult to fully specify in a single prompt—thus, alternative approaches that enable users to iteratively construct and refine generated designs may be needed especially for animations.

"We combined emerging design principles for language-based prompting of design artifacts with code-generation capabilities of LLMs to build a new AI-powered animation tool called Keyframer. With Keyframer, users can create animated illustrations from static 2D images via natural language prompting. Using GPT-4, Keyframer generates CSS animation code to animate an input Scalable Vector Graphic (SVG)."

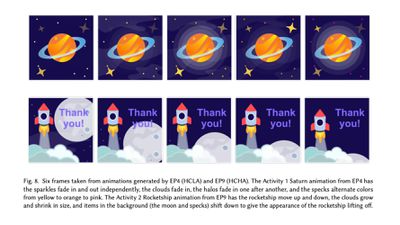

To create an animation, the user uploads an SVG image – of a space rocket, say – and types in a prompt like "generate three designs where the sky fades into different colors and the stars twinkle." Keyframer then generates CSS code for the animation, which the user can refine by editing the code directly or by entering additional text prompts.
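
For readers curious what "CSS animation code" for that prompt might look like, here is a minimal Python sketch that prints a pair of keyframe rules for a fading sky and twinkling stars. It is an illustration only, not Keyframer's actual output: the selectors (`#sky`, `.star`), the animation names, the colors, and the durations are all assumptions, and the helper functions are hypothetical.

```python
def keyframes_rule(name, steps):
    """Build an @keyframes rule from a {percent: {property: value}} mapping."""
    lines = [f"@keyframes {name} {{"]
    for percent in sorted(steps):
        decls = " ".join(f"{prop}: {value};" for prop, value in steps[percent].items())
        lines.append(f"  {percent}% {{ {decls} }}")
    lines.append("}")
    return "\n".join(lines)


def animation_rule(selector, name, duration_s):
    """Attach a looping animation to the elements matched by a CSS selector."""
    return f"{selector} {{ animation: {name} {duration_s}s ease-in-out infinite; }}"


def build_css():
    # Assumed element names: an SVG shape with id "sky" and shapes with class "star".
    sky = keyframes_rule("sky-fade", {
        0: {"fill": "#0b1d3a"},
        50: {"fill": "#5b2a86"},
        100: {"fill": "#0b1d3a"},
    })
    twinkle = keyframes_rule("twinkle", {
        0: {"opacity": "1"},
        50: {"opacity": "0.2"},
        100: {"opacity": "1"},
    })
    return "\n".join([
        sky,
        animation_rule("#sky", "sky-fade", 8),
        twinkle,
        animation_rule(".star", "twinkle", 2),
    ])


css = build_css()
print(css)
```

Editing a duration or color in the printed CSS corresponds to the "edit the code directly" path described above; asking for a different effect in words corresponds to the follow-up prompt path.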

"Keyframer enabled users to iteratively refine their designs through sequential prompting, rather than having to consider their entire design upfront," explain the authors. "Through this work, we hope to inspire future animation design tools that combine the powerful generative capabilities of LLMs to expedite design prototyping with dynamic editors that enable creators to maintain creative control."

According to the paper, the research was informed by interviews with professional animation designers and engineers. "I think this was much faster than a lot of things I've done," said one of the study participants quoted in the paper. "I think doing something like this before would have just taken hours to do."

The innovation is just the latest in a series of AI breakthroughs by Apple. Last week, Apple researchers released an AI model that harnesses the power of multimodal LLMs to perform pixel-level edits on images.

In late December, Apple also revealed that it had made strides in deploying LLMs on iPhones and other Apple devices with limited memory by developing a new flash memory utilization technique.

Both The Information and analyst Jeff Pu have said that Apple will have some kind of generative AI feature available on the iPhone and iPad later in the year, when iOS 18 is released. The next version of Apple's mobile software is said to include an enhanced version of Siri with ChatGPT-like generative AI functionality, and has the potential to be the "biggest" update in the iPhone's history, according to Bloomberg reporter Mark Gurman.

(Via VentureBeat.)