Update: We've learned from Apple that the Communication Safety code found in the first iOS 15.2 beta is not a feature in that update, and Apple does not plan to release the feature as it is described in this article.

Apple this summer announced new Child Safety Features that are designed to keep children safer online. One of those features, Communication Safety, appears to be included in the iOS 15.2 beta that was released today. This feature is distinct from the controversial CSAM initiative, which has been delayed.

Based on code found in the iOS 15.2 beta by MacRumors contributor Steve Moser, Communication Safety is being introduced in the update. The code is there, but we have not been able to confirm that the feature is active because it requires sensitive photos to be sent to or from a device set up for a child.

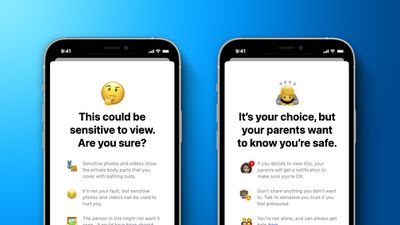

As Apple explained earlier this year, Communication Safety is built into the Messages app on iPhone, iPad, and Mac. It will warn children and their parents when sexually explicit photos are received on or sent from a child's device, with Apple using on-device machine learning to analyze image attachments.

If a sexually explicit photo is flagged, it is automatically blurred and the child is warned against viewing it. For kids under 13, if the child taps the photo and views it anyway, the child's parents will be alerted.

Code in iOS 15.2 features some of the wording that children will see.

- You are not alone and can always get help from a grownup you trust or with trained professionals. You can also block this person.

- You are not alone and can always get help from a grownup you trust or with trained professionals. You can also leave this conversation or block contacts.

- Talk to someone you trust if you feel uncomfortable or need help.

- This photo will not be shared with Apple, and your feedback is helpful if it was incorrectly marked as sensitive.

- Message a Grownup You Trust.

- Hey, I would like to talk with you about a conversation that is bothering me.

- Sensitive photos and videos show the private body parts that you cover with bathing suits.

- It's not your fault, but sensitive photos can be used to hurt you.

- The person in this may not have given consent to share it. How would they feel knowing other people saw it?

- The person in this might not want it seen-it could have been shared without them knowing. It can also be against the law to share.

- Sharing nudes to anyone under 18 years old can lead to legal consequences.

- If you decide to view this, your parents will get a notification to make sure you're OK.

- Don't share anything you don't want to. Talk to someone you trust if you feel pressured.

- Do you feel OK? You're not alone and can always talk to someone who's trained to help here.

There are specific phrases for children under 13 and for children 13 and older, as the feature behaves differently for each age group. As mentioned above, if a child 13 or older views a nude photo, their parents will not be notified, but if a child under 13 does so, their parents will be alerted. All of these Communication Safety features must be enabled by parents and are available for Family Sharing groups.

- Nude photos and videos can be used to hurt people. Once something's shared, it can't be taken back.

- It's not your fault, but sensitive photos and videos can be used to hurt you.

- Even if you trust who you send this to now, they can share it forever without your consent.

- Whoever gets this can share it with anyone-it may never go away. It can also be against the law to share.
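The age-dependent behavior described above can be summarized in a short sketch. This is only an illustration of the rules as reported in this article, not Apple's code: the `Outcome` type, the `handle_photo` function, and all parameter names are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Outcome:
    blurred_with_warning: bool  # photo is initially blurred and the child is warned
    parents_notified: bool      # parents receive an alert


def handle_photo(flagged: bool, child_age: int,
                 enabled_by_parent: bool, child_views_anyway: bool) -> Outcome:
    """Hypothetical decision logic based on the behavior described in the article."""
    # Nothing happens unless a parent has turned the feature on
    # and on-device analysis has flagged the photo.
    if not enabled_by_parent or not flagged:
        return Outcome(blurred_with_warning=False, parents_notified=False)
    # Parents are alerted only when a child under 13 chooses to view the photo.
    notify = child_views_anyway and child_age < 13
    return Outcome(blurred_with_warning=True, parents_notified=notify)


if __name__ == "__main__":
    print(handle_photo(True, 10, True, True))
    print(handle_photo(True, 15, True, True))
```

In this sketch, a flagged photo is always blurred with a warning when the feature is enabled, and the child's age only affects whether parents are notified.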

Apple in August said that these Communication Safety features would be added in updates to iOS 15, iPadOS 15, and macOS Monterey later this year. The company also said that iMessage conversations remain end-to-end encrypted and are not readable by Apple.

Communication Safety was announced alongside a new CSAM initiative that will see Apple scanning photos for Child Sexual Abuse Material. That initiative has been highly controversial and heavily criticized, leading Apple to choose to "take additional time over the coming months" to make improvements before introducing the new functionality.

At this time, there is no sign of CSAM wording in the iOS 15.2 beta, so Apple may introduce Communication Safety first, before implementing the full suite of Child Safety Features.