A number of Apple patent applications, published earlier today, appear to be directly related to its long-rumored mixed reality headset, covering a range of aspects including design elements, lens adjustment, eye-tracking technology, and even software.

The applications, filed by Apple with the U.S. Patent and Trademark Office, seemingly relate to specific features of its mixed reality headset product.

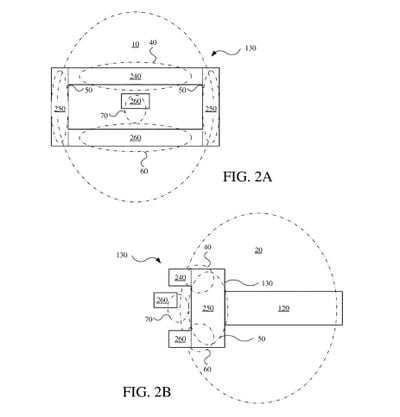

Firstly, Apple applied for a patent related to several of the design elements of a head-mounted display unit in a filing titled "Head-Mounted Display Unit With Adaptable Facial Interface." This filing attempts to explain how a number of individual design elements can prevent a headset from being moved around by facial movements, increase the ability of a user to move their face when wearing a headset, and improve general comfort.

In supporting a headset on both the upper and lower facial regions separately, Apple seeks to reduce tension and facial compression for a more comfortable fit. This is achieved with "a light seal that conforms to the face of the user," which also blocks out environmental light, various facial supports of different stiffnesses, and even a "sprung" lower section. Among the recent deluge of reports surrounding Apple's mixed reality headset was The Information's remark that Apple is using a supportive "mesh material" around the headset for comfort.

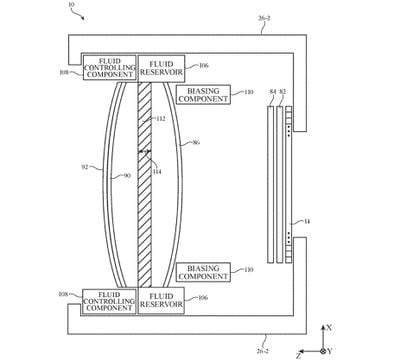

In another application published today titled "Electronic Device With A Tunable Lens," Apple describes a lens-adjustment system for a head-mounted display unit. To present content optimally to a specific wearer, the optical lenses inside a VR/AR headset usually have to be adjusted.

Apple's system for adjusting lenses involves using a first and second lens element "separated by a liquid-filled gap with an adjustable thickness." By modulating how much fluid is in this gap, the headset can move the lens elements closer together or farther apart to suit a specific user. Unlike many other VR headsets, which require users to manually move lenses, Apple's system is entirely electronic and controlled by actuators. Lens elements may also be "semi-rigid" so that their curvature can be adjusted as needed.

Earlier this month, The Information claimed that Apple's headset would feature "advanced technology for eye tracking." Now, a new patent application from Apple, simply titled "Eye Tracking System," outlines a process to detect the position and movement of a user's eyes in a head-mounted display unit.

The eye tracking system includes at least one eye tracking camera, an illumination source that emits infrared light towards the user's eyes, and diffraction gratings located at the eyepieces. The diffraction gratings redirect or reflect at least a portion of infrared light reflected off the user's eyes, while allowing visible light to pass. The cameras capture images of the user's eyes from the infrared light that is redirected or reflected by the diffraction gratings.

Infrared cameras and a light source can be placed within the headset behind the lenses to detect infrared light bounced off of a user's eyes. The "diffraction grating," placed between the camera and a user's eye, may take the form of a thin holographic film laminated to the lens, and serves to direct the light from a user's eye directly to the camera, while allowing visible light from the headset's display to pass through as normal. The IR camera would need to be placed at the edges of the display panels, near a user's cheekbones.

The filing goes on to describe in detail how this system allows the headset to precisely locate and track the movement of a user's "point of gaze." The system is so accurate that it is even able to detect pupil dilation. In terms of software applications for eye tracking, Apple remains vague, but it does suggest that the technology could be used for "gaze-based interactions" such as creating eye "animations used in avatars in a VR/AR environment."

The Information said that, using "cameras on the device, the headset will also be able to respond to the eye movements and hand gestures of the wearer." It is also of note that in 2017, Apple acquired SensoMotoric Instruments, a German firm that made eye-tracking technology for VR headsets. One purpose suggested by The Information was as follows:

Apple has for years worked on technology that uses eye tracking to fully render only parts of the display where the user is looking. That would let the headset show lower-quality graphics in the user's peripheral vision and reduce the device's computing needs, according to people with knowledge of the efforts.
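The approach described in that report is generally known as foveated rendering. Neither the report nor the patent application specifies an algorithm, so the following is only a minimal sketch of the general idea: screen tiles close to the tracked gaze point are rendered at full resolution, and tiles farther away at reduced resolution. The tier thresholds, the scale values, and the function names are all hypothetical and chosen purely for illustration.

```python
import math

# Hypothetical quality tiers: (max distance from the gaze point, resolution scale).
# Distances are in normalized screen coordinates, where the screen spans 0..1.
TIERS = [(0.15, 1.0), (0.35, 0.5)]
PERIPHERY_SCALE = 0.25  # assumed scale for everything outside the tiers


def render_scale(tile_center, gaze_point):
    """Return the resolution scale for one tile, given the current gaze point."""
    distance = math.dist(tile_center, gaze_point)
    for max_distance, scale in TIERS:
        if distance <= max_distance:
            return scale
    return PERIPHERY_SCALE


def scale_map(gaze_point, grid=4):
    """Build a grid x grid map of resolution scales for the whole screen."""
    return [
        [
            render_scale(((col + 0.5) / grid, (row + 0.5) / grid), gaze_point)
            for col in range(grid)
        ]
        for row in range(grid)
    ]


if __name__ == "__main__":
    # Gaze near the top-left corner: only nearby tiles get a high scale.
    for row in scale_map((0.125, 0.125)):
        print(row)
```

In this sketch, only the tiles nearest the gaze point are drawn at full scale, which is how the technique would reduce the amount of rendering work for the rest of the display.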

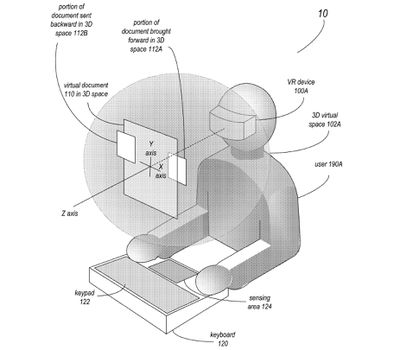

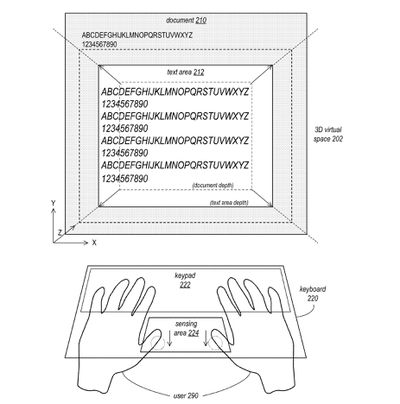

Finally, Apple has revealed a user interface concept for "a virtual reality and/or augmented reality device" in a filing called "3D Document Editing System." The patent application sets out how documents can be edited in a virtual 3D space. The system pairs a keyboard with the headset, via Bluetooth or a wired connection, for editing text.

The VR device may be configured to display a 3D text generation and editing GUI in a virtual space that includes a virtual screen for entering or editing text in documents via a keypad of the input device. Unlike conventional 2D graphical user interfaces, using embodiments of the 3D document editing system, a text area or text field of a document can be placed at or moved to various Z-depths in the 3D virtual space.

The filing adds that the headset may be able to detect a user's gestures to move selected content in a document such as highlighted text, shapes, or text boxes, and explains that users could move document elements in three dimensions on a Z-axis. It goes on to list a number of specific finger gestures to perform specific actions in a 3D text editor application.

In some embodiments, a document generated using the 3D text editing system may be displayed to content consumers in a 3D virtual space via VR devices, with portions of the document (e.g., paragraphs, text boxes, URLs, sentences, words, sections, columns, etc.) shifted backward or forward on the Z axis relative to the rest of the content in the document to highlight or differentiate those parts of the document. For example, active text fields or hot links such as URLs may be moved forward relative to other content in the document so that they are more visible and easier to access by the consumer in the 3D virtual space using a device such as a controller or hand gestures.
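The Z-depth idea in that passage can be illustrated with a small data model. This is only a sketch under stated assumptions, not the system described in the filing: the `Element` type, the set of element kinds that get highlighted, the offset value, and the convention that a larger `z` means closer to the viewer are all hypothetical.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class Element:
    """One piece of a document laid out in a 3D virtual space (hypothetical model)."""
    kind: str       # e.g. "paragraph", "url", "text_field"
    text: str
    z: float = 0.0  # depth offset; larger means closer to the viewer (assumed)


# Assumed: kinds of content to bring forward, and by how much.
HIGHLIGHT_KINDS = {"url", "text_field"}
FORWARD_OFFSET = 0.1


def highlight(elements):
    """Return a copy of the document with interactive elements shifted forward."""
    return [
        replace(e, z=e.z + FORWARD_OFFSET) if e.kind in HIGHLIGHT_KINDS else e
        for e in elements
    ]


if __name__ == "__main__":
    doc = [
        Element("paragraph", "Some body text."),
        Element("url", "https://example.com"),
    ]
    for element in highlight(doc):
        print(element.kind, element.z)
```

Here the link ends up at a different depth from the body text, mirroring the filing's example of moving URLs forward so they are easier to see and select.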

The patent application also proposes that the headset could pass through a view of the user's real-world environment in the document editing software.

In some embodiments, the VR device may also provide augmented reality (AR) or mixed reality (MR) by combining computer generated information with a view of the user's environment to augment, or add content to, a user's view of the world. In these embodiments, the 3D text generation and editing GUI may be displayed in an AR or MR view of the user's environment.

This is similar to The Information's claim that the "cameras on the device will be able to pass video of the real world through the visor and display it on screens to the person wearing the headset, creating a mixed-reality effect," and the close correspondence between these patent applications and recent rumors about Apple's mixed reality headset is striking. While patent applications cannot be taken as evidence of the exact technologies Apple is planning to bring to consumer products, it is difficult to look past the way in which these filings fit into the bigger picture surrounding Apple's AR/VR project.

Analyst Ming-Chi Kuo has said that Apple will reveal an augmented reality device this year, and according to JP Morgan, the device will launch in the first quarter of 2022. The headset is expected to be priced around $3,000, competing with the likes of Microsoft's HoloLens 2, which costs $3,500. Yesterday, designer Antonio De Rosa shared photorealistic renders of what Apple's mixed reality headset is believed to look like based on recent rumors.