When Apple introduced iOS 10, macOS Sierra, watchOS 3, and tvOS 10 at the 2016 Worldwide Developers Conference, it also announced plans to implement a new technology called Differential Privacy, which helps the company gather data and usage patterns for a large number of users without compromising individual security.

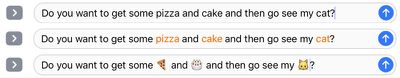

At the time, Apple said Differential Privacy would be used in iOS 10 to collect data to improve QuickType and emoji suggestions, Spotlight deep link suggestions, and Lookup Hints in Notes, and said it would be used in macOS Sierra to improve autocorrect suggestions and Lookup Hints.

There's been a lot of confusion about differential privacy and what it means for end users, leading Recode to write a piece that clarifies many of the details of differential privacy.

First and foremost, as with all of Apple's data collection, users can choose not to share data with the company. Data collection using differential privacy is entirely opt-in, and users decide whether or not to send data to Apple.

Apple will begin collecting this data with iOS 10 and has not been doing so already. It also will not use the cloud-stored photos of iOS users to bolster image recognition capabilities in the Photos app.

As for what data is being collected, Apple says that differential privacy will initially be limited to four specific use cases: new words that users add to their local dictionaries, emoji typed by the user (so that Apple can suggest emoji replacements), deep links used inside apps (provided they are marked for public indexing), and lookup hints within notes.

Apple will also continue to do a lot of its predictive work on the device, something it started with the proactive features in iOS 9. This work doesn't tap the cloud for analysis, nor is the data shared using differential privacy.

Apple's deep concern for user privacy has put its services like Siri behind competing services from other companies, but Differential Privacy gives the company a way to collect useful data without compromising the security of its customer base.

As Apple's senior VP of software engineering Craig Federighi explained at the WWDC keynote, differential privacy uses hashing, subsampling, and noise injection to enable crowd-sourced learning without gathering data on individual people.
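To make those three ingredients concrete, here is a minimal Python sketch. It is not Apple's algorithm, which the keynote did not detail. It assumes a simple randomized-response scheme over a one-hot encoding, and the names and constants (`NUM_BUCKETS`, `EPSILON`, `SAMPLE_RATE`, `privatize`, `estimate_counts`) are hypothetical choices made for illustration.

```python
import hashlib
import math
import random

# All three constants are illustrative assumptions, not Apple's values.
NUM_BUCKETS = 16    # size of the hashed domain
EPSILON = 2.0       # privacy parameter; smaller means more noise
SAMPLE_RATE = 0.5   # fraction of events a device reports at all


def keep_probability() -> float:
    """Probability that a single bit is reported truthfully."""
    return math.exp(EPSILON / 2) / (math.exp(EPSILON / 2) + 1)


def bucket_of(value: str) -> int:
    """Hashing: map an arbitrary string (a word, an emoji) to a bucket."""
    digest = hashlib.sha256(value.encode("utf-8")).digest()
    return int.from_bytes(digest[:4], "big") % NUM_BUCKETS


def privatize(value: str, rng: random.Random):
    """Run on the device. Returns a noisy bit vector, or None if skipped."""
    if rng.random() >= SAMPLE_RATE:      # subsampling: drop some events
        return None
    keep = keep_probability()
    true_bucket = bucket_of(value)
    bits = []
    for i in range(NUM_BUCKETS):
        bit = 1 if i == true_bucket else 0
        if rng.random() >= keep:         # noise injection: flip the bit
            bit = 1 - bit
        bits.append(bit)
    return bits


def estimate_counts(reports):
    """Run on the server. Debias the summed bits into per-bucket estimates."""
    n = len(reports)
    keep = keep_probability()
    flip = 1 - keep
    sums = [sum(r[i] for r in reports) for i in range(NUM_BUCKETS)]
    # E[sum_i] = c_i * keep + (n - c_i) * flip, solved for c_i.
    return [(s - n * flip) / (keep - flip) for s in sums]
```

A single report reveals very little, since any bit may have been flipped, but across thousands of reports the noise averages out and the popular bucket stands out:

```python
rng = random.Random(0)
reports = [r for r in (privatize("😀", rng) for _ in range(4000)) if r is not None]
estimates = estimate_counts(reports)
most_common_bucket = max(range(NUM_BUCKETS), key=lambda i: estimates[i])
```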
