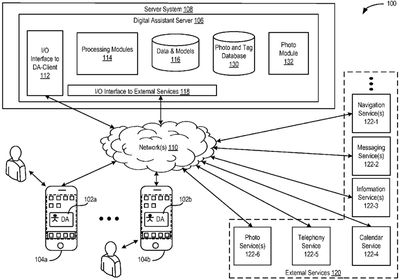

Siri may be able to search through and tag user photos in the future, according to a new Apple patent application published today by the United States Patent and Trademark Office (via AppleInsider). Entitled "Voice-based Image Tagging and Searching," the application describes a system allowing digital images to be tagged with various identifiers like the name of a person, a location, or an activity in a photograph, which can then be searched for via voice commands given to a "voice-based digital assistant," aka Siri.

A user could, for example, take a photograph and then speak a description of what is in it. Saying a phrase like "this is me at the beach" would automatically tag the photo with the appropriate information, which could later be recalled with a simple voice-based search. Apple specifies that, beyond the spoken information, additional tags such as a user name and location could also be added.

Moreover, because the natural-language processing is capable of inferring additional information, the tags may include additional information that the user did not explicitly say (such as the name of the person to which "me" refers), and which creates a more complete and useful tag.

Once a photograph is tagged using the disclosed tagging techniques, other photographs that are similar may be automatically tagged with the same or similar information, thus obviating the need to tag every similar photograph individually. And when a user wishes to search among his photographs, he may simply speak a request: "show me photos of me at the beach."

According to Apple, the ever-increasing number of digital photographs stored on electronic devices like the iPhone has created a need for "systematic cataloging" to improve organization and simplify image searches. Apple says current approaches to photo tagging are "non-intuitive, arduous, and time-consuming," and presents voice-based tagging as a dramatic improvement in both speed and convenience.

Beyond recording information based on spoken text strings, Apple also suggests that its system could automatically tag photographs based on previously captured user images and data, recognizing faces, buildings, and landscapes. Based on the stored photographic data, users could then conduct voice searches via Siri, quickly locating all relevant images.

Apple began improving its photo organization features with iOS 7, revamping the Photos app with information on when and where photos were taken. Using this information, images within the app can be grouped into "Moments," a feature that already provides a solid basis for adding photo searches to Siri in the future.

The patent application, which was originally filed on March 13, 2013, lists former Apple employee Jan Erik Solem and Thijs Willem Stalenhoef as inventors. As with all of Apple's patents and patent applications, it is unclear if and when the technology will make it into a final product.