Apple continues to research ways to improve its mobile device interfaces, as evidenced by two patent applications published over the past couple of weeks. The two applications take different approaches to movement-aware interfaces on portable devices.

The first application, published a couple of weeks ago, explores the possibility of using motion itself as an input method.

One problem with existing portable media devices such as cellular telephones is that users can become distracted from other activities while interfacing with the media device's video display, graphical user interface (GUI), and/or keypad. For example, a runner may carry a personal media device to listen to music or to send/receive cellular telephone calls while running. In a typical personal media device, the runner must look at the device's display to interact with a media application in order to select a song for playing. Also, the user likely must depress the screen or one or more keys on its keypad to perform the song selection. These interactions with the personal media device can divert the user's attention from her surroundings which could be dangerous, force the user to interrupt her other activities, or cause the user to interfere with the activities of others within her surroundings.

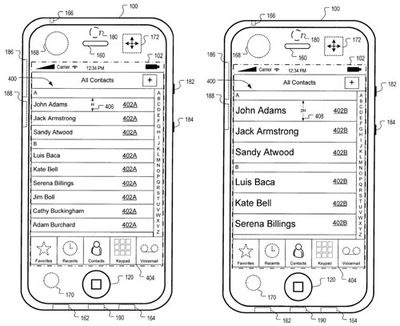

Apple proposes using motion-based gestures, rather than on-screen buttons, to invoke specific commands. One example is flicking the phone to step through contacts. Combining gestures with on-screen buttons or bezel touch detection could prevent accidental gesturing. Such a system, however, seems somewhat ambitious.
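To make the idea concrete, here is a minimal Python sketch of how a flick could be turned into a command. It is not taken from the patent application: the `Sample` type, the `detect_flicks` function, the command names, and the 1.5 g threshold are all assumptions made for illustration. The sketch treats a flick as a jolt along one axis and only accepts it while a guard input (an on-screen button or a bezel touch) is held, which is one way accidental gestures might be filtered out.

```python
from dataclasses import dataclass
from typing import Iterable, List

# Hypothetical threshold, in g: how strong a sideways jolt must be to count as a flick.
FLICK_THRESHOLD_G = 1.5


@dataclass
class Sample:
    """One accelerometer reading (hypothetical structure, for illustration only)."""
    x_accel_g: float     # acceleration along the device's x-axis, in g
    guard_pressed: bool  # True while an on-screen button or the bezel is being touched


def detect_flicks(samples: Iterable[Sample],
                  threshold: float = FLICK_THRESHOLD_G) -> List[str]:
    """Return one command per detected flick, in the order the flicks occurred."""
    commands: List[str] = []
    in_flick = False  # True while the acceleration stays at or above the threshold
    for s in samples:
        strong = abs(s.x_accel_g) >= threshold
        # Fire only on the first strong sample of a jolt, and only if the guard is held.
        if strong and not in_flick and s.guard_pressed:
            commands.append("next_contact" if s.x_accel_g > 0 else "previous_contact")
        in_flick = strong
    return commands
```

A jolt that arrives without the guard held is ignored entirely, so ordinary bumps while walking or running would not step through the contact list in this sketch.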

A more practical take on this problem emerges from a patent application published today. In this application, Apple concedes that users may have difficulty using the iPhone's touch interface while in motion:

A user of a device can interact with the graphical user interface by making contact with the touch-sensitive display. The device, being a portable device, can also be carried and used by a user while the user is in motion. While the user and the device is in motion, the user's dexterity with respect to the touch-sensitive display can be disrupted by the motion, detracting from the user's experience with the graphical user interface.

Apple's solution to the problem is to modify the iPhone's interface in real time when it detects that the user is in motion (for example, running or jogging).

In the example given, the device enlarges each entry in the contact list when it detects motion. Similar adjustments could be made to the iPhone's home screen to improve touch accuracy during activity.
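As a rough illustration of that kind of adjustment, the following Python sketch picks a larger row height for a contact list when recent accelerometer readings look shaky. Nothing here comes from the application itself: the function names, the row heights, and the 0.3 g threshold are hypothetical, and using the spread of the acceleration magnitude as a motion measure is simply one plausible choice.

```python
import math
from typing import Sequence, Tuple

# All constants below are assumptions chosen for illustration.
MOTION_THRESHOLD_G = 0.3   # spread of acceleration (in g) above which the user counts as moving
BASE_ROW_HEIGHT = 44       # row height, in points, when the device is steady
ENLARGED_ROW_HEIGHT = 66   # row height, in points, when motion is detected

Reading = Tuple[float, float, float]  # (x, y, z) acceleration in g


def motion_level(readings: Sequence[Reading]) -> float:
    """Return the standard deviation of the acceleration magnitude, in g."""
    if not readings:
        return 0.0
    magnitudes = [math.sqrt(x * x + y * y + z * z) for x, y, z in readings]
    mean = sum(magnitudes) / len(magnitudes)
    variance = sum((m - mean) ** 2 for m in magnitudes) / len(magnitudes)
    return math.sqrt(variance)


def contact_row_height(readings: Sequence[Reading]) -> int:
    """Choose a larger touch target when the recent readings indicate motion."""
    if motion_level(readings) >= MOTION_THRESHOLD_G:
        return ENLARGED_ROW_HEIGHT
    return BASE_ROW_HEIGHT
```

A device lying still reports a nearly constant magnitude of about 1 g, so the spread stays close to zero and the normal layout is kept. The bouncing of a jog produces a much wider spread and, in this sketch, switches the list to the enlarged rows.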

Apple's patent applications generally cover a wide range of possibilities and don't necessarily result in shipping products, but they do show the direction of Apple's recent research.

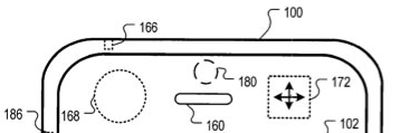

Update: As one reader points out, the patent diagram also depicts a front-facing video camera on the device (labeled 180). A front-facing camera could allow video-chat capabilities in future iPhones.

The other labeled sensors are a proximity sensor, an ambient light sensor, and an accelerometer. Apple also mentions the possible use of a gyroscope or a digital compass (magnetometer), the implications of which were previously detailed.