Apple's patent applications provide a glimpse of what might lie ahead. For example, we saw an explosion of multi-touch patents in the year before the iPhone's release. And while many of these concepts may never come to market, they provide an interesting look into the direction of Apple's research.

One of the most recent patent applications from Apple is titled Multitouch Data Fusion and is authored by Wayne Westerman and John Elias (formerly of Fingerworks). Westerman and Elias have been prolific publishers of multi-touch patent applications and likely helped establish the multi-touch technology behind Apple's iPhone. While many have since hoped to see some sort of advanced multi-touch interface for Apple's Macs, only limited multi-touch support has been included in Apple's notebooks.

In Multitouch Data Fusion, however, Westerman and Elias are already exploring the use of other inputs to help improve and augment multi-touch interfaces. These include:

- Voice recognition

- Finger identification

- Gaze vector

- Facial expression

- Handheld device movement

- Biometrics (body temp, heart rate, skin impedance, pupil size)

The patent application gives examples of how each of these could be used in conjunction with multi-touch to provide a better user experience. A few highlights are provided here:

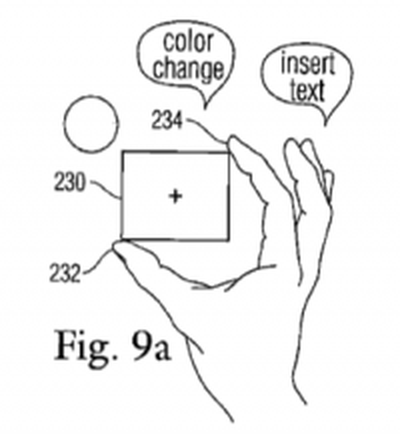

Voice - Some tasks are described as being better suited to either voice recognition or multi-touch. For example, if a user's task is to resize, rotate and change the color of an object on the screen, multi-touch would be best suited to the resize and rotation tasks, but changing the color (or inserting text) may be better suited to a voice command.

Finger identification - the application suggests that using built-in cameras (such as the iSight) with a swing mirror could provide an over-the-keyboard view of multi-touch gestures. This video information could be used to better distinguish which fingers are being used in which position. In conjunction with the touch input, this could be used to create more specific and accurate gestures.

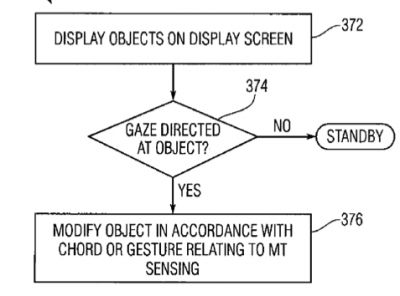

Gaze - tracking where the user is looking could help pick windows or objects on the screen. Rather than resorting to moving a mouse pointer to the proper window, a user could simply direct his gaze at the particular window and then invoke a touch gesture.
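
To make the gaze idea concrete, here is a minimal Python sketch of how a gaze point might pick the target window for a touch gesture. It is an illustration only, not the method described in the patent application: the `Window` class, the `window_under_gaze` and `route_gesture` helpers, the gesture names, and the assumption that windows are listed front to back are all hypothetical.

```python
from dataclasses import dataclass
from typing import Optional, Sequence, Tuple


@dataclass(frozen=True)
class Window:
    """Hypothetical on-screen window described by its bounding rectangle."""
    name: str
    x: float
    y: float
    width: float
    height: float

    def contains(self, px: float, py: float) -> bool:
        return (self.x <= px < self.x + self.width
                and self.y <= py < self.y + self.height)


def window_under_gaze(windows: Sequence[Window],
                      gaze: Tuple[float, float]) -> Optional[Window]:
    """Return the first window containing the gaze point.

    Assumes `windows` is ordered front to back, so the first hit
    is the one the user can actually see.
    """
    gx, gy = gaze
    for window in windows:
        if window.contains(gx, gy):
            return window
    return None


def route_gesture(windows: Sequence[Window],
                  gaze: Tuple[float, float],
                  gesture: str) -> Optional[Tuple[str, str]]:
    """Pair a touch gesture with the window the user is looking at."""
    target = window_under_gaze(windows, gaze)
    if target is None:
        return None  # gaze is off every window, so drop the gesture
    return (target.name, gesture)


# Two overlapping windows, with "Mail" in front of "Browser".
windows = [
    Window("Mail", 0, 0, 400, 300),
    Window("Browser", 200, 100, 600, 500),
]
print(route_gesture(windows, (500, 400), "two_finger_scroll"))
```

In this sketch the gaze replaces the mouse pointer only for choosing the target; the gesture itself still comes from the touch surface.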

Facial expression - detecting frustration on the face of a user could trigger help prompts or even alter input behavior. In the example given, if a user is incorrectly trying to scroll a window using three fingers instead of two, the computer may be able to detect the frustration and either accept the faulty input or prompt the user.
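
The three-finger scroll example can be sketched in a few lines of Python. This is a rough illustration under stated assumptions rather than anything specified in the application: the frustration score (0.0 to 1.0) is assumed to come from some separate face-analysis component, and the `TouchEvent` class, the `interpret` function, the `FRUSTRATION_THRESHOLD` value, and the action names are all made up for the example.

```python
from dataclasses import dataclass

SCROLL_FINGERS = 2            # finger count the scroll gesture expects
FRUSTRATION_THRESHOLD = 0.7   # hypothetical cutoff for "user looks frustrated"


@dataclass(frozen=True)
class TouchEvent:
    """Hypothetical touch input: how many fingers, and what they did."""
    fingers: int
    motion: str  # e.g. "vertical_drag"


def interpret(event: TouchEvent, frustration: float, lenient: bool = True) -> str:
    """Decide what to do with a touch event, given a frustration score.

    `lenient` picks between the two responses mentioned in the article:
    accept the faulty input, or prompt the user with help.
    """
    if event.motion != "vertical_drag":
        return "ignore"
    if event.fingers == SCROLL_FINGERS:
        return "scroll"  # correct gesture, no fusion needed
    if frustration >= FRUSTRATION_THRESHOLD:
        # Wrong finger count, but the user appears frustrated.
        return "scroll" if lenient else "show_help"
    return "ignore"


wrong_scroll = TouchEvent(fingers=3, motion="vertical_drag")
print(interpret(wrong_scroll, frustration=0.9))
print(interpret(wrong_scroll, frustration=0.9, lenient=False))
```

The point of the sketch is that the touch data alone is ambiguous; the second input decides whether a near-miss gesture should be forgiven.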

Many of these technologies are likely years away from the market, but they continue to provide an interesting peek into Apple's future.